due dates: (see the rules)

- initial solutions and class presentations – March 4th

- final solutions and writeups – March 11th

The Design Challenge

The topic of this challenge is to create visualizations to help our colleagues in Educational Psychology interpret their Epistemic Frame Network data. Specifically, you need to address the problem of comparing two Frame Networks.

A detailed explanation of the data (and the problems the domain experts hope to solve) will be given in class on Thursday, February 18th.

This is a challenging problem for which we really don’t have a good solution yet. Our hope is that by having the class generate new ideas, we can find a bunch of new designs that may help them in both interpreting and presenting their data. Even though they have limited data right now, they are in the process of developing new tools that will generate a lot more data, so having good tools will be increasingly important. For your testing, we will also provide synthetic data.

The data is different than other data types seen in visualization. At first, it seems like lots of other network data. But these networks are small, dense, and weighted. Its not clear that standard network visualization methods apply. (and we haven’t discussed them in class yet)

The Data

(more details will be given in class on Thursday, February 18th)

An Epistemic Frame Network consists of a set of concepts. The size of the network (the number of concepts) we’ll denote as n. For small networks, n might be a handfull (5 or 6), large networks are unlikely to be bigger than a few dozen (20-30). Most networks we’ll look at are in the 6-20 range. Each concept has a name which has meaning to the domain scientist. (see the information from the domain scientist to really understand what the data means)

The data for the network is a set of association strengths. Between each pair of concepts, there is a strength that corresponds to how often the two concepts occur together. If the association strength is zero, the two concepts never occur together. If the number is bigger, the concepts appear together more often. The actual magnitude of the numbers has little meaning, but the proportions do. So if I say the association between A and B is .5, you don’t know if that’s a lot or a little. But if the association between A and B is .5 and between A and C is .25, you know that A is twice as strongly associated with B than C. The associations are symmetric, but they don’t satisfy the triangle inequality (knowing AB and AC tells you nothing about BC).

The numbers for a network are often written in matrix form. The matrix is symmetric. The diagonal elements (the association between a concept and itself) is not well defined – some of the data just puts zeros along the diagonal. So the matrix:

| 0 |

.5 |

.25 |

| .5 |

0 |

.75 |

| .25 |

.75 |

0 |

Is a 3 concept network, where the association between node A and B is .5, between A and C is .25, and between B and C is .75.

A more detailed explanation of what the data means may be provided by the domain experts. But you can think of association strength as “how closely related are the two concepts” (stronger is more closely related).

As an analogous problem, you can think of the network as a social network. The concepts are people, and the associations are how well they know each other, or how much time they talk to each other. A description of this problem (as well as this visualization problem) is provided on the SCCP page (single conversation cocktail party). (in the terminology of SCCP, what we get is the “interaction matrix”, not the “measurement matrix”).

As a practical issue, the data will be provided as “csv” (comma seperated value) files containing symmetric matrices. The matrices are small enough that the redundancy isn’t a big deal. The will usually be an associated text file with the names of the concepts. If the names aren’t provided, you can just refer to the concepts by letter (A,B,C, …). In fact, you might want to refer to them that way no matter what.

The Problem

The domain experts will explain what they want to do in interpreting the data. But the real problems are generally comparative: given 2 or 3 (or maybe more) networks, how do we understand the similarities and differences.

When comparing networks, you can assume they have the same concepts in the same order. In the event that one matrix is bigger than the other, you can simply pad the smaller ones with extra rows and columns of zeros.

Keep in mind that the data is noisy, has uncertainty, and some ambiguity (since the magnitudes don’t have meaning). What matters are the proportions between different observations. In fact, different matrices might be scaled differently. This matrix here:

is equivalent to the one above in the previous section.

It might be easier for you to think about the problem in terms of the cocktail party. In fact, we’ll provide you with a pile of example data from our cocktail party simulator. (we have limited real example data).

The Solution

First, I don’t think there is “THE” solution. There are probably lots of good ways to look at this data. Some good for some types of understanding, others good for other types.

How often have I said to you that when you have eliminated the impossible, whatever remains, however improbable, must be the truth? (Sherlock Holmes)

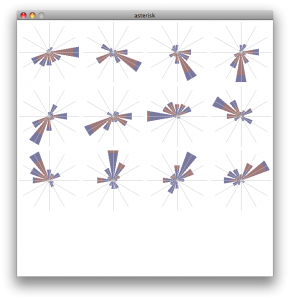

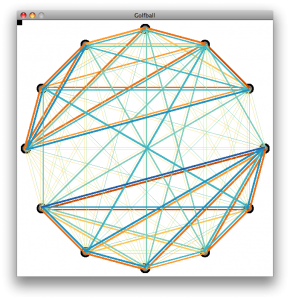

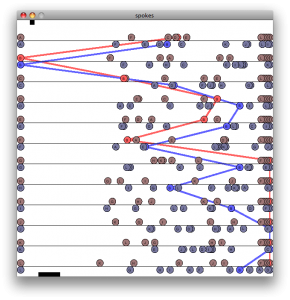

I told David (the domain expert) that the way I was going to find one good visualization was to generate 50 bad ones first. You can see a number of my attempts on the SCCP page. We will provide you with the sample code for all of these (except for the graph visualization solutions, which use a program I downloaded called “graphvis”). Our domain experts have also generated a few visualization ideas that they will show to you on February 18th.

Well, hopefully, we won’t need to generate 50 ideas. We’ll learn from the initial attempts and get to good answers quickly.

Your team will be expected to generate at least 1 (preferably several) possible solutions. Ideally, you will implement them as a tool that can read in matrices of various sizes so that we can try it out. However, if you prefer to prototype your visualization by drawing it by hand, that’s OK – please use one of the “real” example data sets though.

There is a need for a variety of solution types:

- static pictures (for putting into print publications) as well as interactive things

- tools for exploring data sets (to understand the differences between a set of networks), as well as tools for communicating these findings to others (where the user understands the differences)

It is difficult to evaluate a solution without really understanding the domain. That’s part of the challenge. You will have access to the domain experts to ask them questions. You can also think about things in terms of the SCCP domain (for which you are as expert as anyone).

The Challenge

The class will be divided into teams of 3 (approximately, since we have 16 people). We will try to assign teams to provide a diverse set of talents to each team. Hopefully, each team will have at least one person with good implementation skills for building interactive prototypes.

You will be able to ask questions of the domain experts in class on February 18th. If you want to ask them questions after that, send email to me (Mike Gleicher). I will pass the question along, and give the response back to the entire class (watch the comments on this posting).

Please do not contact the domain experts directly.This is partially to limit their burden, but also for fairness (some groups may have more access to them).

On March 4th, we’ll use the class period for each group to present their solutions to the domain experts and to discuss our progress. Groups will then get another week to write up their solutions. We’ll provide more details as time gets closer.

What to Create

Each time should create at least one (preferably more) visualization techniques for the ENF data.

You can devise tools for understanding a single network, but you must address the problem of comparing 2 networks. Its even better if you can come up with solutions for handing 3 or more networks. (but showing that you have a solution for the 2-way comparison is a minimum requirement)

Your approach should scale to networks with 20+ nodes in it.

It is best if you implement your proposed techniques so that they can load in data files. However, if you want to “prototype” manually (either drawing it by hand, or manually creating specific visualizations from some of the example data sets), that’s OK. You might want to do a simple prototype first, and then polish and generalize an implementation after.

For the demos (March 4th) you will be able to choose the data sets to show off your methods. For the final handins, we would prefer to be able to try out your techniques on “live” data. Ideally, we will give the tools you build to the domain experts and let them use them.

Designing tools that are interactive is great. For the demo, only you need to be able to use your tool (you will give the demo), but for the final handin, you will be expected to document what you’ve created.

I am aware that we haven’t discussed interaction (or network visualization) in class yet – this might be a good thing since I don’t want to cloud your judgment and have you just apply old ideas. Be creative!

Resources

Be sure to watch this page (and the comments on it) for updates and changes and more details.